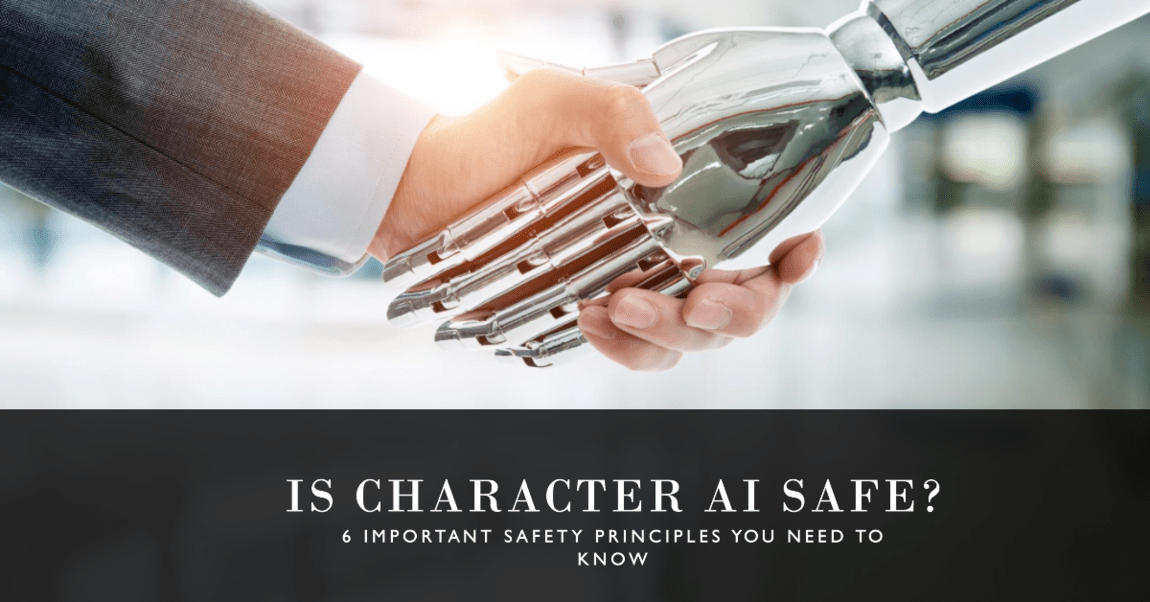

In this in-depth article, we will explore the question “Is Character AI safe?” & dig into the safety fundamentals of Character AI (Artificial Intelligence), examining its data protection safeguards, potential risks, & responsible use.

Artificial intelligence (AI) has opened up new possibilities in this age of technological growth, sparking discussions & raising concerns about its safety & ramifications. Character AI (c.ai), an innovative application built by former developers of Google’s LaMDA project, has become an engaging platform for interactive conversations with human-like text responses. Despite its popularity, many people have asked: “Is Character AI safe?”

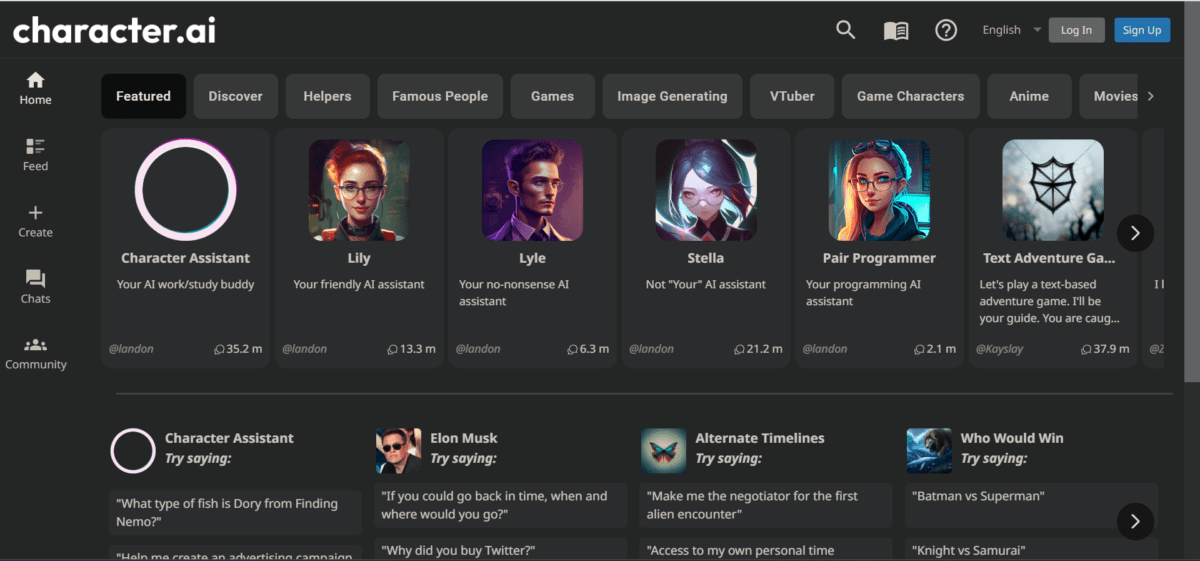

What is Character AI?

Character AI (or c.ai), developed by Noam Shazeer & Daniel De Freitas, former Google engineers who worked on Google’s LaMDA project, lets users interact with an intelligent chatbot. Launched as a beta version in September 2022, Character AI has gained immense popularity thanks to its realistic and captivating conversations.

Character AI is a neural language model chatbot service that excels at generating human-like text responses & holding contextual conversations that build on earlier messages. Users can create “characters,” craft their “personalities,” set specific parameters, and publish them for others to interact with. Users can also chat with fictional, historical, & celebrity characters created by other users.

Although Character AI produces more human-like output than many other AI chatbots, it has limitations. Creating truly original and creative characters can be challenging, and the AI’s responses are sometimes nonsensical or incoherent. Its safeguards also cannot fully prevent users from creating Not Safe for Work (NSFW) content.


Safety Spectrum: Is Character AI Safe?

Character AI is widely regarded as a secure and dependable platform. Users trust its security measures, transparent terms of service, and privacy policy. However, Character AI, like most AI chatbots, has access to user conversations and personal data. This information is mostly used to improve service quality and to give users more personalized replies. According to the platform, user data is not shared with third parties unless required by law or to prevent fraud. To answer “Is Character AI safe?”, we will first look at its potential misuses.

Despite its safeguards, Character AI presents several potential problems that must be addressed:

Impersonation

Character AI (c.ai) can be used to build lifelike chatbots that imitate real people, which can help disinformation and fraud spread.

Misinformation

AI (Artificial Intelligence) can generate text that is factually wrong or misleading, which can sway people’s opinions & spread false information.

Deepfakes

Character AI (c.ai) could be abused to make deepfakes, which are manipulated videos or audio recordings that appear authentic. These can cause reputational harm or spread disinformation.

To reduce these critical dangers, users should approach the platform with caution, & developers must provide strong security measures & educate users about the possible threats.

6 Important Safety Principles for Character AI

- Privacy by Design: When designing and developing a platform, developers should prioritize user privacy and data protection.

- Informed Consent: Before using the site, users must be well informed about how their data will be handled and must give explicit consent.

- Transparent Policies: The platform’s terms of service and privacy policy should be transparent, describing its data collection, usage, and sharing practices.

- Content Moderation: To avoid the spread of dangerous or misleading material, effective content moderation techniques are required.

- Reporting Methods: Users should have easy access to reporting methods that allow them to flag abusive or improper content.

- Awareness and Education: Raising awareness of potential dangers and educating users on proper usage are critical steps in reducing potential harm.


Character AI’s Privacy Policy

Character AI’s privacy policy defines how the platform collects, uses, & shares user data. To improve the AI’s performance, it collects personal information such as your name, email address, IP address, & conversation data. The policy states that this data will not be shared with third parties unless required by law or to combat fraud. Character AI (c.ai) also uses SSL encryption to protect user data in transit. A careful reading of the privacy policy goes a long way toward answering our main question: is Character AI safe or not?

Addressing NSFW Content

To address concerns about NSFW (not safe for work) content, Character AI has implemented a solution that redirects such messages to a public room when the NSFW checkbox is activated. This special feature allows users to avoid potential exposure to explicit content, promoting a safer user experience.

Mobile Usage and Data Storage

Character AI (c.ai) extends its security features to mobile users by making the platform available through mobile browsers. At the time of writing, a dedicated mobile app was also in development. For data storage, Character AI keeps user conversation data to improve the AI’s performance. This improves the conversational experience, but it also raises privacy and security concerns, because sensitive information shared during chats may be retained and retrieved outside the immediate context.

Final Thought: Is Character AI safe or not?

Character AI (c.ai) is an exciting platform for AI-powered conversational adventures, but is Character AI safe? To use it safely, users must prioritize safety, privacy, & ethical use. Responsible use, transparent policies, & user consent are essential safeguards against possible dangers. Developers must continually refine security measures & educate users about the platform’s capabilities & limitations. By sticking to ethical practices, users can enjoy the benefits of Character AI (c.ai) responsibly while minimizing potential harm in the conversational era of AI (Artificial Intelligence).


FAQs

Can Character AI chats be leaked?

Yes, Character AI chats can be leaked. The platform does not end-to-end encrypt chats, so they are potentially accessible to the company’s employees. There have also been reports of past glitches that exposed user chats to the public.

Does Character AI track you?

Yes, Character AI (c.ai) monitors user conversations & collects data such as text inputs, character interactions, and how long & how often users interact. It also collects technical information such as the user’s device, IP address, browser type, & operating system. This information is used to develop the platform & give users more personalized experiences.

Does Character AI take my data?

Yes, Character AI (c.ai) collects personal information, technical data, & chat data from users to enhance the platform’s performance and provide personalized experiences. Users can opt out of some data collection practices by clearing cookies & using private browsing, but this may limit access to certain features.